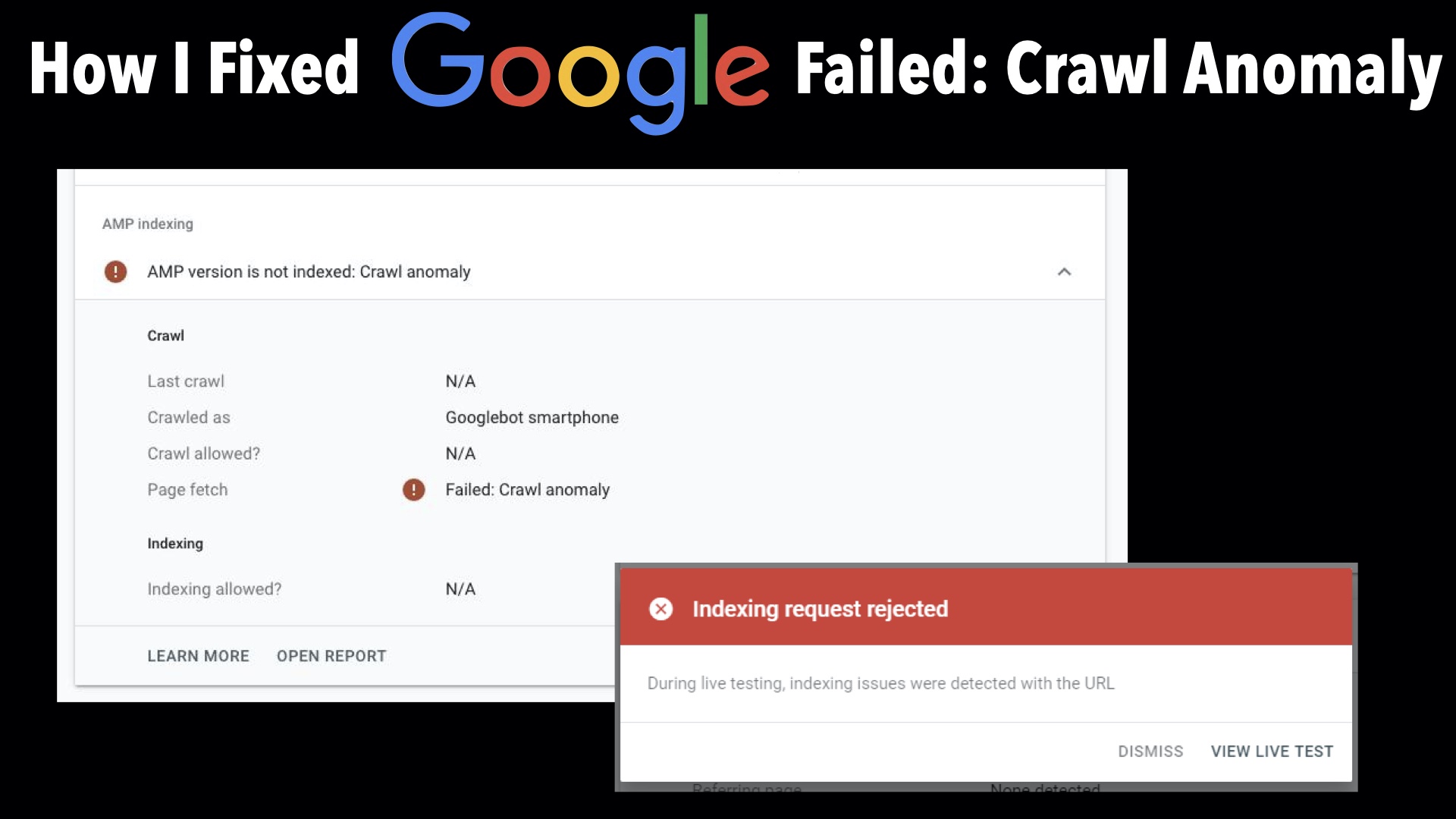

Three months ago, I shared with you guys how I fixed the AMP errors that had suddenly started plaguing my site. Last week another issue came up: Google stopped indexing my site, especially the AMP pages, and started reporting "Failed: Crawl anomaly" errors.

After much research, I discovered that Googlebot was suddenly having trouble accessing my site, as if it had somehow been blocked from crawling it properly.

This quick guide will show you how I was able to fix this. But first, make sure your robots.txt or .htaccess file hasn’t undergone any recent changes that are suddenly making it block bots.
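If you want to rule out robots.txt quickly, here is a minimal sketch using Python's standard `urllib.robotparser`. The sample rules and the example.com URLs are placeholders I made up for illustration, so swap in the lines from your own robots.txt.

```python
from urllib.robotparser import RobotFileParser


def is_allowed(robots_lines, user_agent, url):
    """Return True if the given robots.txt rules let user_agent fetch url."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser.can_fetch(user_agent, url)


# Hypothetical robots.txt content; paste your own lines here.
SAMPLE_ROBOTS = [
    "User-agent: *",
    "Disallow: /wp-admin/",
]

if __name__ == "__main__":
    for path in ("/", "/wp-admin/"):
        url = "https://example.com" + path
        print(path, is_allowed(SAMPLE_ROBOTS, "Googlebot", url))
```

If a page you expect to be indexed comes back as not allowed, the problem is in robots.txt rather than the firewall.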

NOTE: Unfortunately, this guide is targeted at those on dedicated hosting or a VPS. If you’re on shared hosting, you’ll most likely have to contact your web host to implement these changes.

Video Transcript

Log into WHM and go to ConfigServer Security and Firewall. Scroll down to the lfd – Login Failure Daemon section, select csf.rignore, Reverse DNS lookup and click on Edit.

Copy and paste the domain suffixes for the major search bots into the file and click on Change to save and restart the firewall.

You can find a list of these bot domains down below.

.googlebot.com

.crawl.yahoo.net

.search.msn.com

.google.com

.yandex.ru

.yandex.net

.yandex.com

.crawl.baidu.com

.crawl.baidu.jp
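To make it clearer what these entries mean, here is a rough sketch of the idea behind reverse DNS whitelisting: look up the hostname for the visiting IP, check that it ends with one of the suffixes, then confirm the hostname resolves back to the same IP. This is my own illustration of the concept, not the actual lfd code, and the function names are made up.

```python
import socket

# Suffixes from the list above, as they would appear in csf.rignore.
BOT_SUFFIXES = (
    ".googlebot.com", ".crawl.yahoo.net", ".search.msn.com", ".google.com",
    ".yandex.ru", ".yandex.net", ".yandex.com",
    ".crawl.baidu.com", ".crawl.baidu.jp",
)


def matches_suffix(hostname, suffixes=BOT_SUFFIXES):
    """True if hostname ends with one of the whitelisted domain suffixes."""
    host = hostname.rstrip(".").lower()
    return any(host.endswith(suffix) for suffix in suffixes)


def is_verified_bot(ip):
    """Reverse-resolve ip, check the suffix, then confirm with a forward lookup.

    Needs working DNS; gethostbyname_ex only covers IPv4 addresses.
    """
    try:
        hostname = socket.gethostbyaddr(ip)[0]
        if not matches_suffix(hostname):
            return False
        forward_ips = socket.gethostbyname_ex(hostname)[2]
    except OSError:
        return False
    return ip in forward_ips
```

The leading dot in each suffix matters: it stops a lookalike such as fakegooglebot.com from matching .googlebot.com.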

The next step is to edit the modsec2.user.conf file. To do this, go to ConfigServer ModSec Control. If you can’t find it, that means it isn’t installed on your server. You can install it yourself using this guide or get your VPS host to do it for you, as I did.

In ModSec Control, scroll down to ConfigServer ModSecurity Tools, select modsec/modsec2.user.conf and click on Edit. If you’ve never edited this file before, it should be blank. Copy and paste the following code into it and save it. Apache will restart and you’re done.

HostnameLookups On

SecRule REMOTE_HOST "@endsWith .googlebot.com" "allow,log,id:5000001,msg:'googlebot'"

SecRule REMOTE_HOST "@endsWith .google.com" "allow,log,id:5000002,msg:'googlebot'"

SecRule REMOTE_HOST "@endsWith .search.msn.com" "allow,log,id:5000003,msg:'msn bot'"

SecRule REMOTE_HOST "@endsWith .crawl.yahoo.net" "allow,log,id:5000004,msg:'yahoo bot'"

SecRule REMOTE_HOST "@endsWith .yandex.ru" "allow,log,id:5000005,msg:'yandex bot'"

SecRule REMOTE_HOST "@endsWith .yandex.net" "allow,log,id:5000006,msg:'yandex bot'"

SecRule REMOTE_HOST "@endsWith .yandex.com" "allow,log,id:5000007,msg:'yandex bot'"

SecRule REMOTE_HOST "@endsWith .crawl.baidu.com" "allow,log,id:5000008,msg:'baidu bot'"

SecRule REMOTE_HOST "@endsWith .crawl.baidu.jp" "allow,log,id:5000009,msg:'baidu bot'"
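Every rule follows the same pattern: one hostname suffix, one unique rule ID and a label. If you ever need to extend the list, a small sketch like this can generate the lines with plain straight quotes, which is what ModSecurity expects. The helper name and the template are my own; only the suffixes, IDs and labels come from the rules in this post.

```python
# (suffix, label) pairs taken from the rules in this post.
BOTS = [
    (".googlebot.com", "googlebot"),
    (".google.com", "googlebot"),
    (".search.msn.com", "msn bot"),
    (".crawl.yahoo.net", "yahoo bot"),
    (".yandex.ru", "yandex bot"),
    (".yandex.net", "yandex bot"),
    (".yandex.com", "yandex bot"),
    (".crawl.baidu.com", "baidu bot"),
    (".crawl.baidu.jp", "baidu bot"),
]

RULE_TEMPLATE = (
    'SecRule REMOTE_HOST "@endsWith {suffix}" '
    '"allow,log,id:{rule_id},msg:\'{label}\'"'
)


def build_rules(bots, first_id=5000001):
    """Return the config lines, giving each rule the next sequential ID."""
    lines = ["HostnameLookups On"]
    for offset, (suffix, label) in enumerate(bots):
        lines.append(
            RULE_TEMPLATE.format(
                suffix=suffix, rule_id=first_id + offset, label=label
            )
        )
    return lines


if __name__ == "__main__":
    print("\n".join(build_rules(BOTS)))
```

Sequential IDs keep each rule unique, which matters because ModSecurity refuses to load two rules with the same ID.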

To check that the new configuration is working, go to ModSecurity Tools. You should see the bot rules showing up under Rule ID.
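If you prefer checking from the log file instead, here is a minimal sketch that counts how often each bot rule fired. It assumes your log lines contain the rule ID in the usual ModSecurity `[id "..."]` form; the log location varies by server, so the function just takes the lines you feed it.

```python
import re
from collections import Counter

# ModSecurity log messages normally carry the rule ID as [id "1234567"].
RULE_ID_PATTERN = re.compile(r'\[id "(\d+)"\]')

# The nine rule IDs used for the bot whitelist in this post.
BOT_RULE_IDS = {str(n) for n in range(5000001, 5000010)}


def count_bot_hits(log_lines):
    """Count log entries per whitelist rule ID, ignoring all other rules."""
    hits = Counter()
    for line in log_lines:
        for rule_id in RULE_ID_PATTERN.findall(line):
            if rule_id in BOT_RULE_IDS:
                hits[rule_id] += 1
    return hits
```

To use it, open your own log file and pass the file object to `count_bot_hits`. A non-zero count for 5000001 means the Googlebot rule is matching.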

When I checked Google Search Console the day after implementing these changes, Google had started reindexing my posts. As you can see, the number of indexed posts started to fall from July 10 and started rising again from July 16, after I fixed it.

I hope this helps to fix the problem for you guys.

Don’t forget to please like, share, comment and subscribe if you found this helpful. Take care guys.

H/T: Whitelist Google, Bing, Yahoo, Yandex, Baidu bots in csf and mod_security
